CULTIVATING FREQUENCIES

MAX/MSP

ARDUINO

PCB DESIGN (Eagle)

Cultivating Frequencies transforms a garden into an interactive and generative musical installation by analyzing data from the garden and translating them into musical components.

Cultivating Frequencies was created in collaboration with the Environmental Masters architecture class at Art Center College of Design, in which I was hired as a contractor to lead the musical, electronic, and interactive development. In fact, Cultivating Frequencies was a small portion of the larger Stgilat Pavilion project which was a collaboration between the architecture firm Cloud 9 and the Art Center College of Design’s Environmental Design program.

I was tasked with creating a generative musical system that would collect environmental data from the piece’s location and from the hydroponic gardening system that would maintain the garden. The data available to me was temperature, humidity, soil moisture, pH, and time/date. Early in the design process, we wondered whether more interactivity could be integrated into this autonomous garden, and whether the plants themselves could be transformed into touch sensors. With a small amount of research using a simple capacitive touch circuit and a wire buried in soil, we found that it was possible (see proof of concept video below).

After this development, the interaction became a key component of the system, embodying the symbiotic relationship between human and garden by encouraging the user not only to view the garden but to engage with it directly through touch. Another layer of user interaction was integrated as a sort of control: weatherproof ultrasonic sensors detect the user’s presence, which triggers the musical component.

With the agreed-upon data and user interactions, I set out to design a generative musical system that not only created a pleasing experience but also aimed to communicate the status and health of the garden through sound. It was as I gathered sample data and plotted it out that the name and conceptual foundation of the piece came to be. Cultivating Frequencies was my way of expressing the connection between the data, sound, and the act of gardening, which all center around the notion of frequency. If you look at temperature data one point at a time, all you have is a single data point without context. If you look at the same data by the hour over many days, plotted on a graph, you get a waveform. Gardening also has many cycles: watering, repotting, fertilizing, harvesting, etc., all occur at a specific frequency. So the heart of this piece was connecting those ideas by building in different frequencies and cycles to generate music that would change hourly, daily, monthly, and yearly. See the diagram below for an overview of the generative musical system.
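As a rough illustration of the data-as-waveform idea, the sketch below maps a day of hourly readings onto a pentatonic scale. It is not the installation's actual Max/MSP patch: the scale, the root note, and the function names `reading_to_midi` and `melody_for_day` are all assumptions made for the example.

```python
# Hypothetical mapping from sensor readings to MIDI notes.
# The scale, root note, and octave range are illustrative assumptions.
PENTATONIC = [0, 2, 4, 7, 9]  # major pentatonic scale degrees, in semitones


def reading_to_midi(value, lo, hi, root=48, octaves=2):
    """Scale a reading in [lo, hi] onto a pentatonic scale starting at `root`."""
    span = hi - lo
    t = 0.0 if span == 0 else (value - lo) / span
    t = min(max(t, 0.0), 1.0)  # clamp readings that fall outside the range
    steps = len(PENTATONIC) * octaves
    index = min(int(t * steps), steps - 1)
    octave, degree = divmod(index, len(PENTATONIC))
    return root + 12 * octave + PENTATONIC[degree]


def melody_for_day(hourly_values):
    """Turn one day of hourly readings into a list of MIDI notes."""
    lo, hi = min(hourly_values), max(hourly_values)
    return [reading_to_midi(v, lo, hi) for v in hourly_values]
```

Because the range is taken from the day's own minimum and maximum, the melody follows the shape of the daily curve rather than the absolute values, which is one simple way to let a slow cycle shape a phrase.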

Alongside the design of the generative musical system, I also did a lot of research into getting more accurate and useful data from the plants as touch sensors. During my research I found the work being done by Disney R&D to create more intelligent touch sensors through a process called Swept Frequency Capacitive Sensing. While the implementation details of their work were proprietary, Mads Hobye, with the help of Nikolaj Møbius, was able to bring a similar system to the Arduino micro-controller. With the permission of the author, I worked on an open-source library, called SweepingCapSense, that packages this type of touch sensing in an easier-to-use form and supports many inputs on an Arduino-compatible micro-controller. My effort in this area was published at the 2014 International Conference on New Interfaces for Musical Expression, where I gave a talk and demonstration of the work.
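Swept-frequency sensing reads the sensor's response at many frequencies instead of one, so each kind of touch produces a characteristic response profile. The sketch below shows one simple way such profiles could be told apart, by picking the nearest stored template. This is an assumption for illustration only: it is not the SweepingCapSense API, and the template values and gesture labels are made up.

```python
import math


def distance(a, b):
    """Euclidean distance between two response profiles of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def classify(profile, templates):
    """Return the label of the stored template closest to the measured profile."""
    return min(templates, key=lambda label: distance(profile, templates[label]))


# Hypothetical templates: one response value per swept frequency step.
TEMPLATES = {
    "none": [10, 50, 90, 50, 10],
    "touch": [10, 40, 60, 80, 30],
    "grab": [5, 20, 40, 60, 70],
}

gesture = classify([11, 42, 58, 79, 28], TEMPLATES)
```

In practice the templates would be recorded per plant during a calibration pass, since each plant and its soil respond differently.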

The last major component of the work was creating a custom PCB shield for an Arduino Mega that would allow for the use of 16 simultaneous SweepingCapSense sensors, four ultrasonic sensors, and a pH sensor. Using Eagle PCB CAD software, I designed and printed the board for this project. I designed the PCB to work with an enclosure that would allow for easy assembly on site, using audio connectors (8mm headphone and XLR jacks) to connect the sensors to the PCB.

Also, please check out the very nice write up The Creators Project did on this project.

THE THIRD ROOM

C++

CINDER

KINECT

PROCESSING

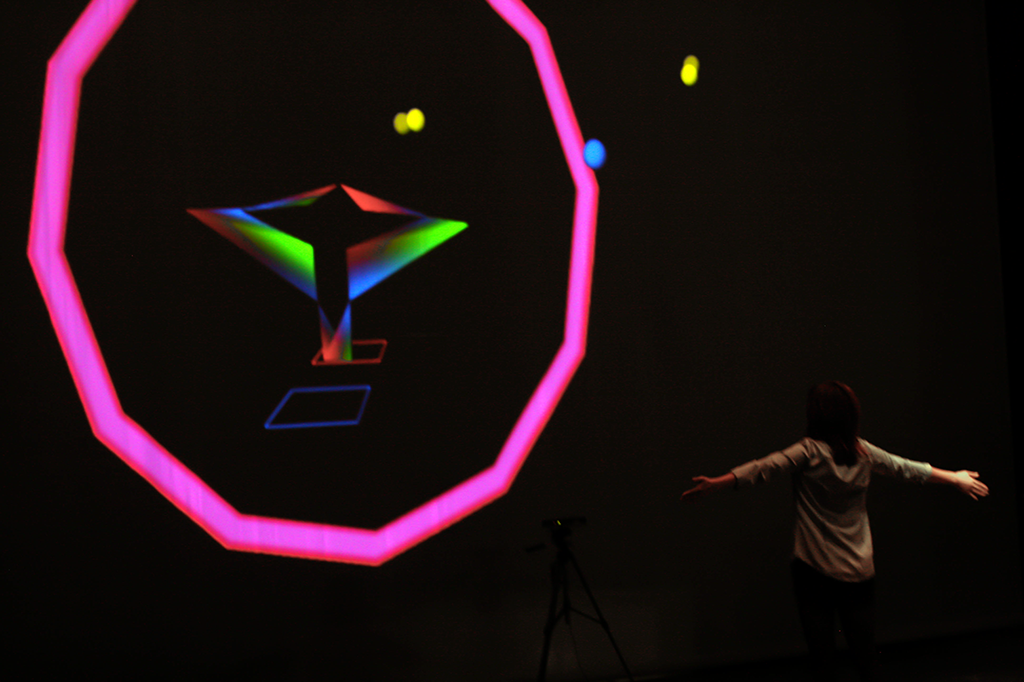

At its core, The Third Room is an interactive musical environment that transforms a physical space into an augmented musical performance space.

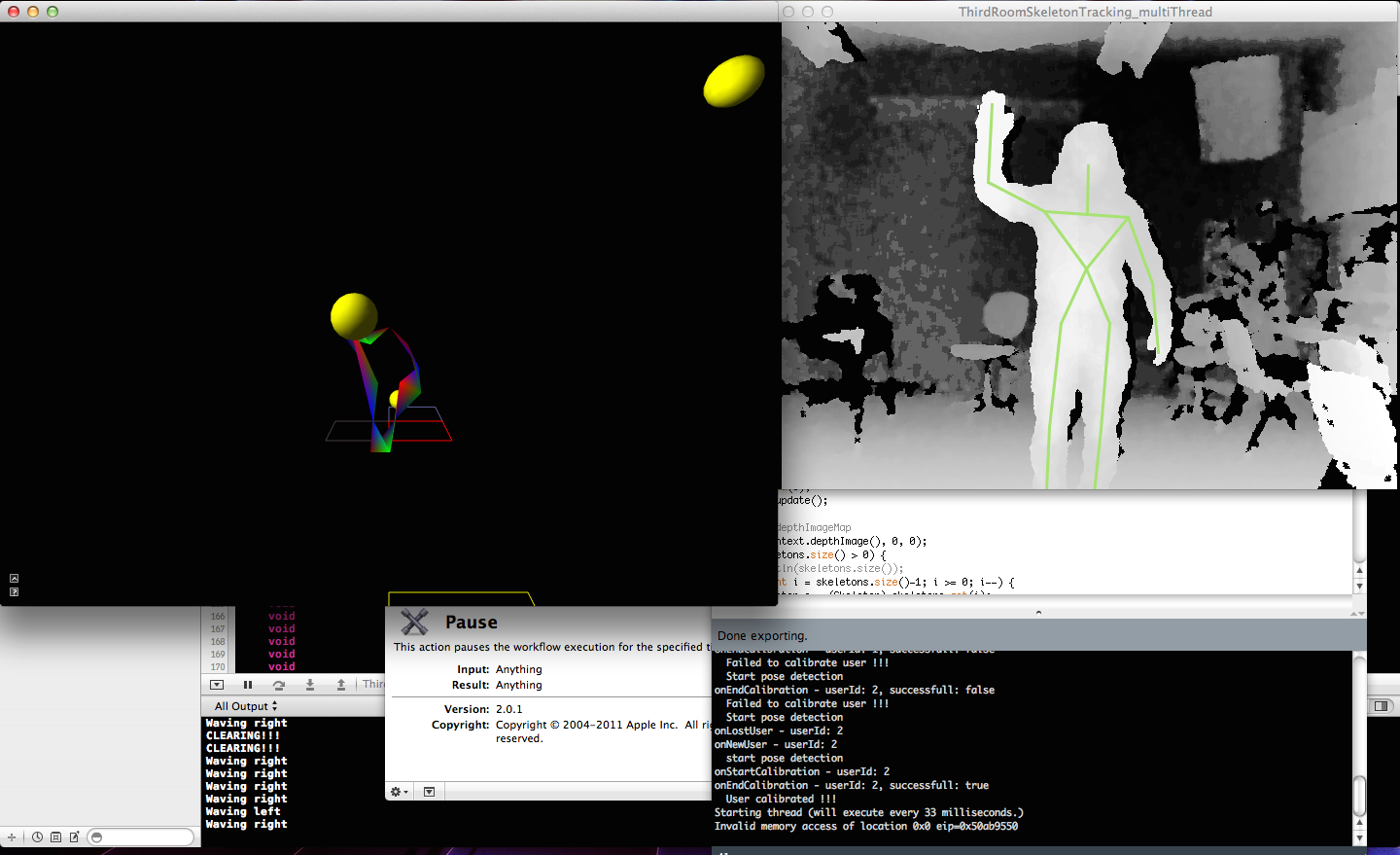

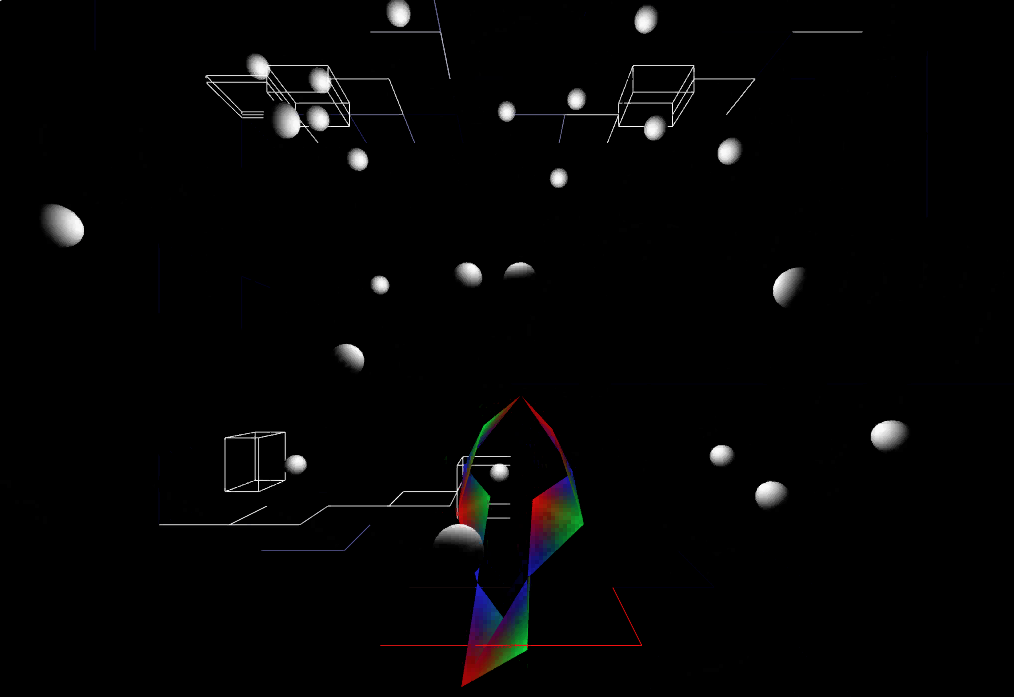

When a user enters The Third Room their body is detected by the Kinect’s depth camera. Their skeleton data is used to track their movement through the physical environment, displaying their position in the projected virtual environment and enabling virtual modes of interaction.

In the first iteration (see video below), the virtual room was pre-populated by different kinds of objects that the user could interact with, using their own body as the interface. However, it was found that users had a difficult time finding these objects given the perceptual difference of navigating a physical space while looking at a mirrored virtual space. In the final iteration, the users have control of object creation and destruction through various gestures. The virtual objects interact differently with the user and the environment, depending on their type, and each has its own sonic characteristics and/or affects the sound of other objects.

The Third Room - First Iteration

The “Ball” is an object that a user creates by waving. It can be thrown and caught, and holds a note value that is triggered when it hits the walls of the room. The “Blob” is an object that is created when two or more users group together in close proximity. When they do, a bass note is triggered as an amorphous shape envelops their avatars, and the parameters of the tone are randomly assigned to the different joints making up the shape. The “Box” makes up the virtual environment itself and has various interaction possibilities. The boxes can be turned on and off like buttons, transforming the entire virtual room into a step sequencer and allowing for a different level of compositional control. The “Taurus” is created when a user brings their hands together; it grows bigger, encircling their avatar, as they pull their hands apart, as seen in some of the pictures below. The Taurus controls the decay of tones created by the Ball objects, allowing the user to dramatically change the composition.
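The Ball's behavior, a note triggered on each wall hit, can be sketched on a single axis as follows. This is only an illustration in Python, not the piece's C++/Cinder code; the `Ball` class, the `step` function, and the room width are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Ball:
    x: float   # position along one axis of the room
    vx: float  # velocity along that axis
    note: int  # MIDI note held by the ball


def step(ball, width, dt):
    """Advance the ball by dt; return its note if it bounced off a wall, else None."""
    ball.x += ball.vx * dt
    if ball.x < 0.0 or ball.x > width:
        # Reflect the position back inside the room and reverse direction.
        ball.x = -ball.x if ball.x < 0.0 else 2 * width - ball.x
        ball.vx = -ball.vx
        return ball.note
    return None


ball = Ball(x=0.9, vx=1.0, note=60)
first = step(ball, width=1.0, dt=0.2)   # crosses the wall at x = 1.0
second = step(ball, width=1.0, dt=0.2)  # travels back into the room
```

A full version would run the same check on all three axes and pass the returned note to the sound engine.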

The user creates these different objects and through their interaction they create a composition that is part generative and part improvised performance. The physical and virtual congruence is increased with the addition of robotic elements that are installed above the user, so as the user reaches into the virtual world, the virtual world then reaches back out into the physical. Also present in the physical room are a pair of interfaces that allow for a multi-modal interactive and immersive experience.

Conceptually, The Third Room is a step toward re-imagining how we approach the creation and performance of digital music. In a virtual environment, the laws that govern the creation of physical musical instruments do not apply. In The Third Room, instruments are created and destroyed; they are manipulable and autonomous; they are real and they are not real. The Third Room attempts to realize a future space, for performance or composition, that exists both physically and virtually, integrating virtual natural interaction with touch and feel.

This piece has been displayed at the CalArts Digital Arts Expo, the Newhall Art Slam, and the 2013 International Conference on New Interfaces for Musical Expression in Daejeon, South Korea. This piece was also published as a paper at the 2013 NIME conference.

PATTERNS OF MINIMAL OCCURRENCE

PYTHON

OPENCV

PROCESSING

Inspired by the naturally occurring metamorphic patterns of mineral deposits, Patterns of Minimal Occurrence is an exploration of collaborative interaction using analog tools with a digital output.

The Interaction:

Participants approached a desk set up to mimic a geologist’s workstation. In the center of the desk, they were presented with an empty notebook and instructions to enter a “sample” by creating an image using only the simple tools provided: geometric rubber stamps and a stamp pad. Users would create their sample and then press a single button to capture a photo from the webcam mounted above the notebook.

The Process:

The image was then passed into a Python program using OpenCV, which would process the image before performing shape and contour detection. Each individual shape was sent to Processing via OSC, where it was transformed into a rigid body and dropped into the screen from above. These bodies were a combination of all the shapes detected in the image, and the color was based on how many sides each component shape had. As the shapes fell, they collided with each other and their environment, eventually coming to rest at the bottom of the screen. After several collisions with other shapes, the rigid bodies broke apart into their component shapes and became permanent and immovable. With each sample entered into the system, the environment grew, creating complex patterns of the component shapes and colors that even looked like mineral deposits. Eventually the pattern filled the entire screen, which took several hours, at which point the piece was over.
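The step from a detected shape to a colored body can be sketched as below, assuming each shape has already been reduced to a list of polygon vertices by the contour detection stage. Only "purple for 10 or more sides" comes from this write-up; the other colors, the `/shape` address, and the message layout are placeholders rather than the project's actual OSC scheme.

```python
# Placeholder palette: only the purple rule is taken from the project description.
PALETTE = {3: "red", 4: "orange", 5: "yellow", 6: "green"}


def color_for_sides(sides):
    """Pick a color from the number of sides of a detected shape."""
    if sides >= 10:
        return "purple"
    return PALETTE.get(sides, "gray")


def shape_message(vertices):
    """Build an (address, arguments) pair describing one shape for the renderer."""
    sides = len(vertices)
    flat = [coord for point in vertices for coord in point]
    return ("/shape", [sides, color_for_sides(sides)] + flat)


triangle = shape_message([(0, 0), (10, 0), (5, 8)])
```

Flattening the vertices into a single argument list keeps each shape in one message, so the receiving side can rebuild the polygon from the side count alone.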

Participants were encouraged to sign the back of their drawing for archival purposes. In addition, the images captured by the webcam were uploaded and recorded to a tumblr site, as were the images of the digital output shortly after the new shapes were introduced.

Below are some timelapse videos of the input and output of the system.

The Input

As seen in the video, the participants found ways to stretch their resources, going beyond the instructions to use only the stamps and using everything on the table to create their samples. The results ranged from unique textures and very complex shapes to very minimal, simple shapes and pencil drawings. Some even used the mineral samples and rocks that were intended for display purposes only.

The complexity of the shapes contributed to the dominant color purple, used for shapes with 10 sides or more.

The Output

This project was not only an experiment based on our own fascination with the metamorphic process in minerals, but also an observation of how people interact with a very tactile and mostly analog interface. We were curious about the outcome of providing these very limited resources (a piece of paper and a few drawing tools, with a single digital action of pressing “capture”) to create an original image with little need for technology.

Patterns of Minimal Occurrence was a collaboration between myself and my partner Jessica De Jesus, created for the 2016 CalArts Digital Arts Expo. Jess art directed and designed the look and feel of this project, both the analog setup and the digital environment, while I developed the programming. Thank you Jess for your insight, thoughtfulness, and eye for detail that transformed this project into a beautiful experience.

THE CIRCUITRY OF LIFE

REAKTOR

PYTHON

CHUCK

ABLETON LIVE

TOUCHDESIGNER

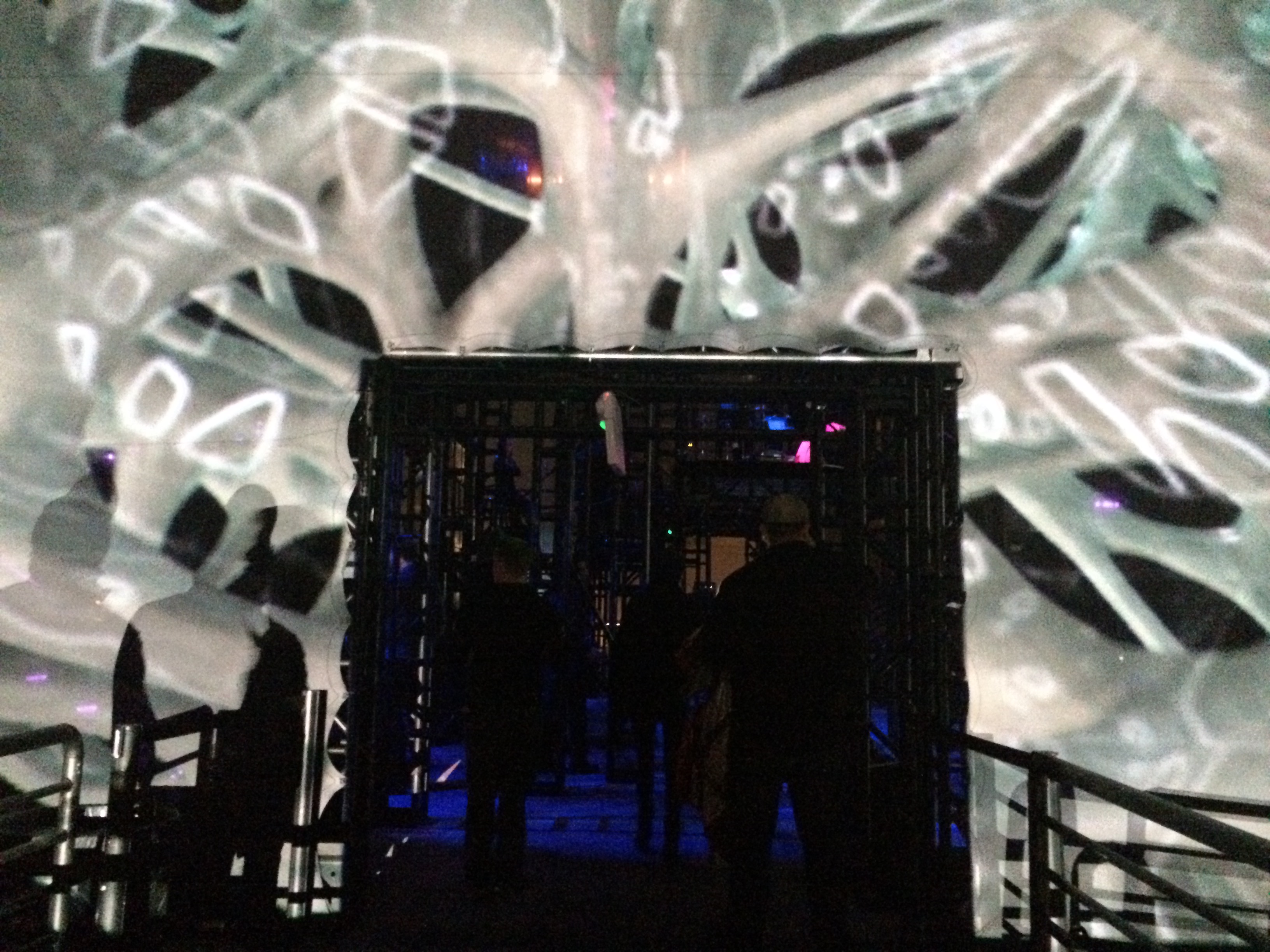

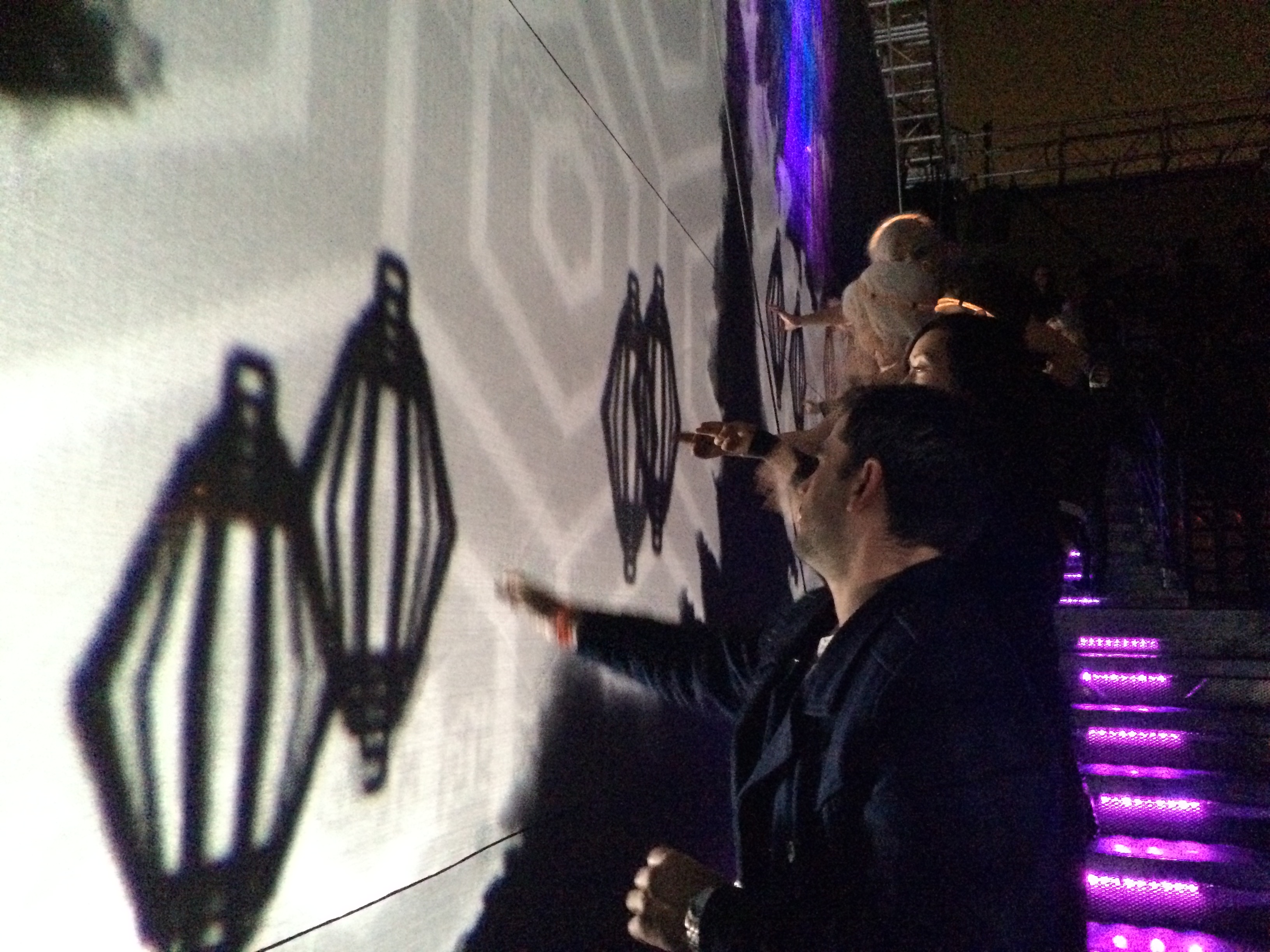

Red Bull at Night presents Heather Shaw’s “The Circuitry of Life” was a two-night event that took place on a rooftop in downtown Los Angeles and featured an immersive club experience that explored the evolution of music and imagined a future of interactivity and immersion.

Together with Jordan Hochenbaum and Owen Vallis—working together as HVHCRAFT—I helped design several interactive experiences that transformed the projection mapped cube environment into different musical instruments playable by an entire crowd of people. We exclusively worked on the interaction design for the musical elements of the experience as well as the sound design. The visuals and AV were provided by Eye Vapor and VT Pro Design.

I created several instruments that used different modes of interaction to create percussive and melodic sounds, parsing the location data sent to us to localize the sound across the 32-speaker array surrounding the audience inside the three-story cube. Because the experience was a temporary one held on a rooftop, we would not have a chance to test it with a full crowd, so I wrote a custom Python script to replay recorded touch data, which let us develop the interactions using data from over 50 testers. This script sent touch data to TouchDesigner, where it would be parsed and sent to our sound design rig, which included custom Reaktor synthesizers, ChucK for parsing and re-routing OSC, and Ableton Live to control playback and routing to the massive speaker array.
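A replay tool of this kind can be quite small. The sketch below is a minimal reconstruction under stated assumptions, not the original script: it assumes each recorded event is a dictionary with a timestamp `t` in seconds, and it hands each event to a caller-supplied `send` function as JSON bytes. The event fields and function names are hypothetical.

```python
import json
import time


def replay(events, send, speed=1.0, sleep=time.sleep):
    """Replay recorded events in time order, preserving the gaps between them.

    events: list of dicts, each with a timestamp "t" in seconds (assumed format)
    send:   callable that receives each event encoded as JSON bytes
    speed:  values above 1.0 play the recording back faster
    sleep:  injectable so the timing can be checked without actually waiting
    """
    previous = None
    for event in sorted(events, key=lambda e: e["t"]):
        if previous is not None:
            sleep((event["t"] - previous) / speed)
        send(json.dumps(event).encode("utf-8"))
        previous = event["t"]


# Example: collect the payloads in a list instead of sending them over the network.
sent, waits = [], []
recording = [
    {"t": 0.0, "x": 0.2, "y": 0.8},
    {"t": 0.5, "x": 0.3, "y": 0.7},
    {"t": 2.0, "x": 0.9, "y": 0.1},
]
replay(recording, sent.append, speed=2.0, sleep=waits.append)
```

To feed a live system, `send` could wrap a UDP socket, for example `lambda payload: sock.sendto(payload, (host, port))`, with the host and port set to wherever the receiving application listens.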

Big thank you to my collaborators Jordan Hochenbaum and Owen Vallis, Heather Shaw and Alexander Kafi for bringing us on, and the immensely talented crew of designers, animators, sound technicians, and musicians that all made this one of the most unique experiences I’ve ever had.

WE ARE ALL SNOWFLAKES

P5.JS

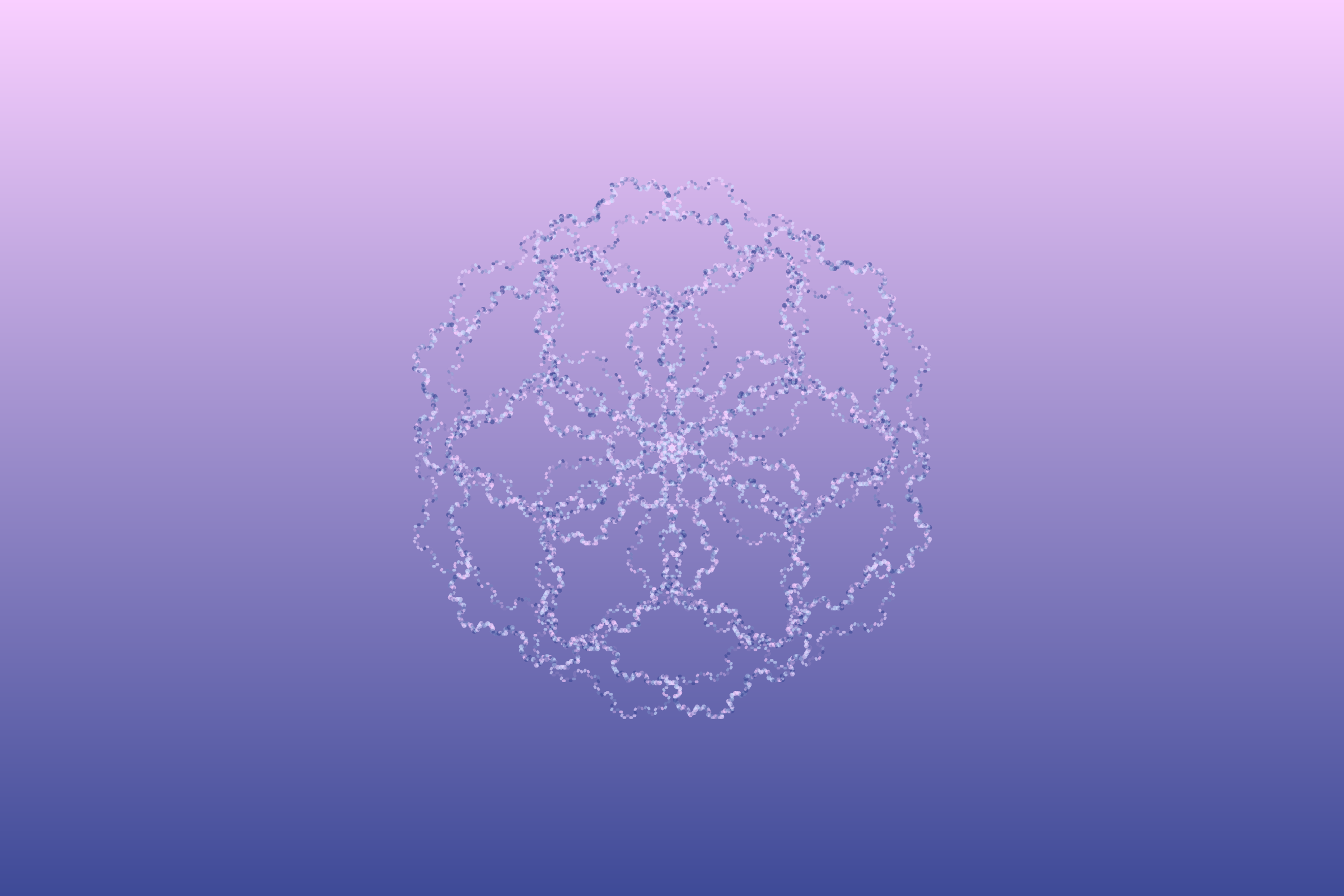

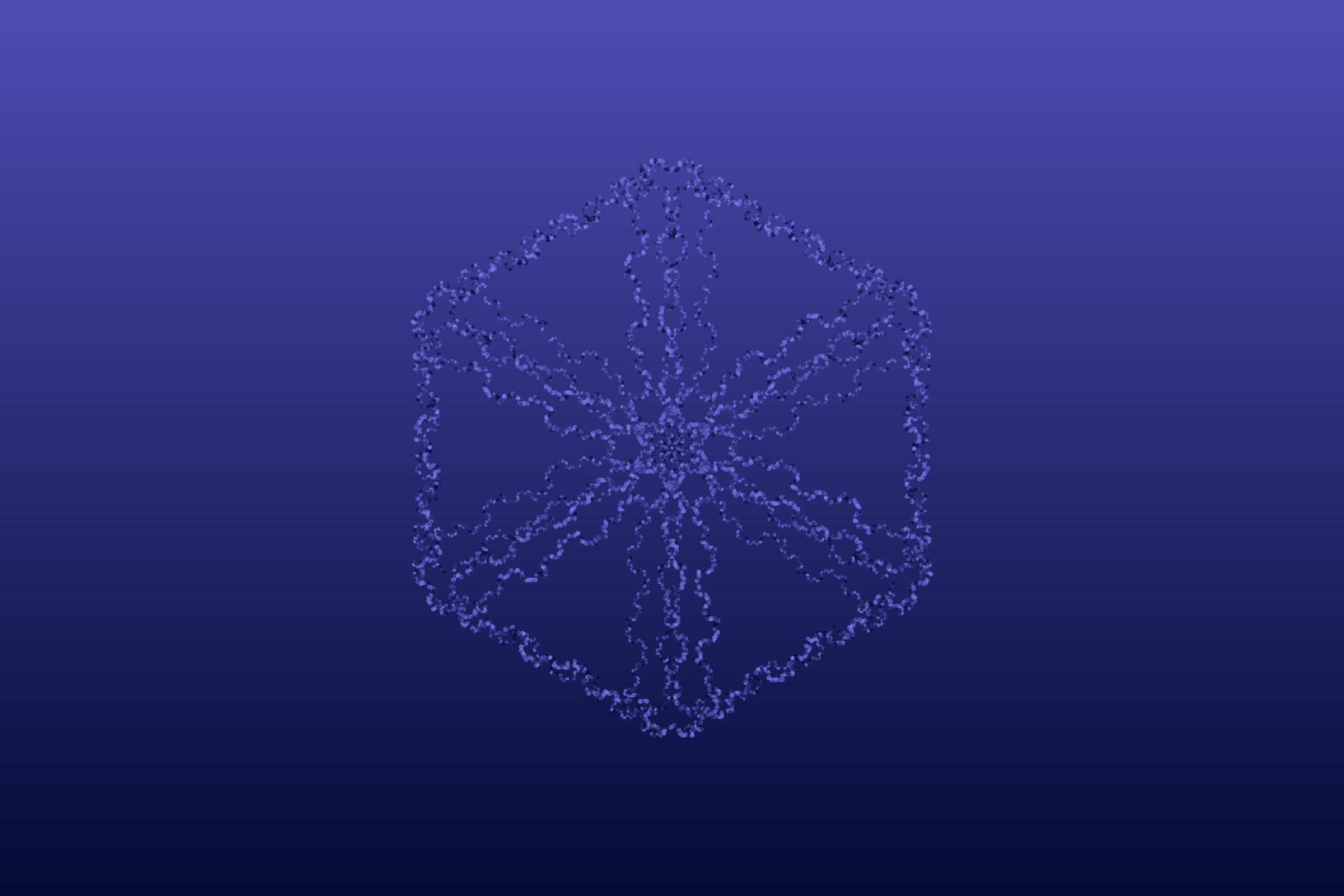

“We Are All Snowflakes” was a project I created for Kadenze, Inc. to promote several of their creative technology classes and serve as a fun, interactive gift for all the members during the holiday season.

In the spirit of the holiday season, Kadenze, the online arts and creative technology MOOC, wanted to create and share a fun project that highlighted some of the skills their users could gain from taking their classes, specifically the Introduction to Programming for the Visual Arts using P5js and Generative Art and Computational Creativity courses. This project was created using the P5JS JavaScript library, spearheaded by Lauren McCarthy and the Processing Foundation to bring the creative coding language Processing to the web in a native library. This amazing library makes it easy for beginners and advanced coders to create interactive and generative audio-visual pieces that can run in the browser and on mobile devices. I combined techniques from this intro course with some of the ideas from the generative arts course, specifically the section that discusses Lindenmayer systems, often referred to as L-systems. These systems apply simple rewriting rules to strings, here usually randomized ones, to generate natural-looking patterns.

Build your own snowflake:

Essentially, a single grammar is generated and the system performs a series of search-and-replace passes that expand a small starting pattern into a full L-system string. Then it begins drawing one point at a time. This in itself would only generate a single line; with some mirroring and rotation, however, we get the crystalline structure of a snowflake.
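The three stages described above (rewrite, draw, mirror and rotate) can be sketched in a few lines. The example is in Python rather than the P5JS used for the piece, and the rule set, the 60-degree turn angle, and the function names are assumptions chosen for illustration.

```python
import math


def expand(axiom, rules, iterations):
    """Apply the rewriting rules to every symbol, `iterations` times."""
    for _ in range(iterations):
        axiom = "".join(rules.get(symbol, symbol) for symbol in axiom)
    return axiom


def trace(commands, step=1.0, angle_deg=60.0):
    """Turtle-style drawing: F moves forward, + turns left, - turns right."""
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]
    for command in commands:
        if command == "F":
            x += step * math.cos(heading)
            y += step * math.sin(heading)
            points.append((x, y))
        elif command == "+":
            heading += math.radians(angle_deg)
        elif command == "-":
            heading -= math.radians(angle_deg)
    return points


def snowflake(points, arms=6):
    """Mirror one branch across the x axis, then rotate copies around the origin."""
    branch = points + [(x, -y) for x, y in points]
    result = []
    for k in range(arms):
        a = 2 * math.pi * k / arms
        result.append([(x * math.cos(a) - y * math.sin(a),
                        x * math.sin(a) + y * math.cos(a)) for x, y in branch])
    return result


branch = trace(expand("F", {"F": "F+F-F"}, 2))
flake = snowflake(branch)
```

Each entry in `flake` is one arm of the snowflake as a list of points, ready to be drawn with whatever line primitive the rendering environment provides.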

While researching snowflakes, I found the amazing work of Kenneth G. Libbrecht, a Professor of Physics at Caltech. Professor Libbrecht has done fascinating research on snowflakes and even grows his own. The color palette was heavily inspired by his fine art photography of snowflakes. Sampling his color palette, I created a random gradient and color palette generator to parallel the overall visual aesthetic.

There is also a draw mode that allows users to draw their own snowflake and save it if they wish.

COLIN HONIGMAN CREATIVE TECHNOLOGIST LOS ANGELES